A Deep Learning Framework for Character Motion Synthesis and Editing

To appear in ACM Transactions on Graphics, vol. 35(4), 2016. (Proceedings of SIGGRAPH 2016)

| Daniel Holden | Jun Saito | Taku Komura |
|---|---|---|
| The University of Edinburgh | Marza Animation Planet | The University of Edinburgh |

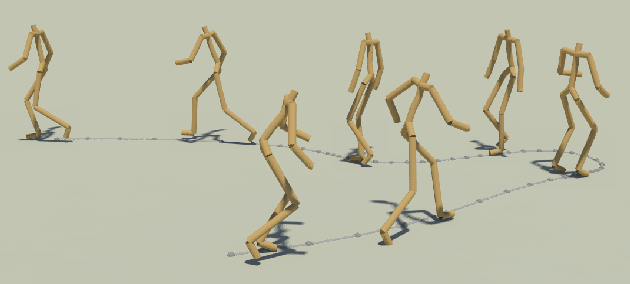

Abstract

We present a framework for synthesizing character movements from high-level user instructions. It uses a motion prior in the form of a convolutional autoencoder trained on a large motion capture dataset. The learned prior, parameterised by the hidden units of the autoencoder, encodes motion data as meaningful components that can be combined to produce a wide range of complex movements. To map high-level instructions to motions encoded by the prior, we stack a deep feedforward neural network on top of the autoencoder. This network is trained to produce realistic motion sequences from intuitive user inputs, such as a curve over the terrain that the character should follow or a target location for punching and kicking. Because the feedforward control network and the motion prior are trained independently, the user can easily switch between feedforward networks according to the desired interface without changing the rich motion prior. Once motion is generated, it can be edited by performing optimisation in the space of the motion prior. This keeps the motion natural while imposing kinematic constraints or transforming its style. As a result, animators can easily produce state-of-the-art, smooth and natural motion sequences without any manual processing of the data, which makes the system highly practical and suitable for animation production.
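The editing step, optimisation in the space of the motion prior, can be illustrated with a minimal sketch. It is not the authors' code: it assumes a purely linear 1D convolutional decoder with random weights standing in for the trained one (no pooling, depooling, or training), a squared-error position constraint on chosen frames, and a small penalty keeping the hidden units near their starting values. The names `decode`, `decode_grad`, and `edit_in_hidden_space`, and all sizes and constraint values, are hypothetical. The point it shows is that gradient descent updates the hidden units H rather than the joint values X, so every intermediate result is still an output of the decoder.

```python
import numpy as np


def decode(H, W, b):
    """Hypothetical stand-in for a trained convolutional decoder.

    H: (T, h) hidden units over T frames; W: (K, h, d) temporal filters
    with odd width K; b: (d,) bias. Returns motion X of shape (T, d),
    using zero padding so the output keeps T frames.
    """
    T, K = H.shape[0], W.shape[0]
    pad = K // 2
    Hp = np.pad(H, ((pad, pad), (0, 0)))
    X = np.empty((T, W.shape[2]))
    for t in range(T):
        # Contract the K hidden frames centred on t with the filter bank.
        X[t] = np.einsum("kh,khd->d", Hp[t:t + K], W) + b
    return X


def decode_grad(G, W, T):
    """Gradient of a scalar loss w.r.t. H, given G = dLoss/dX of shape (T, d)."""
    K = W.shape[0]
    pad = K // 2
    gHp = np.zeros((T + 2 * pad, W.shape[1]))
    for t in range(T):
        # Output frame t was computed from padded hidden frames t..t+K-1.
        gHp[t:t + K] += np.einsum("d,khd->kh", G[t], W)
    return gHp[pad:pad + T]  # drop the zero-padding rows


def edit_in_hidden_space(H0, W, b, constraints, lam=0.01, lr=0.1, iters=500):
    """Plain gradient descent on the hidden units H (not on X directly).

    constraints: list of (frame, dof, target) kinematic targets.
    Loss = sum (X[frame, dof] - target)^2 + lam * ||H - H0||^2.
    """
    H = H0.copy()
    T = H.shape[0]
    for _ in range(iters):
        X = decode(H, W, b)
        G = np.zeros_like(X)
        for t, j, target in constraints:
            G[t, j] += 2.0 * (X[t, j] - target)
        grad = decode_grad(G, W, T) + 2.0 * lam * (H - H0)
        H -= lr * grad
    return H


rng = np.random.default_rng(0)
T, h, d, K = 40, 8, 6, 5                # frames, hidden units, DOFs, filter width
W = 0.1 * rng.standard_normal((K, h, d))
b = np.zeros(d)
H0 = rng.standard_normal((T, h))        # stands in for the encoding of a captured clip
X0 = decode(H0, W, b)

# Hypothetical constraint: pull DOF 2 (e.g. a foot height) to 0 on frames 10 and 11.
constraints = [(10, 2, 0.0), (11, 2, 0.0)]
H = edit_in_hidden_space(H0, W, b, constraints)
X = decode(H, W, b)

before = np.array([X0[t, j] - v for t, j, v in constraints])
after = np.array([X[t, j] - v for t, j, v in constraints])
print("constraint error before:", np.linalg.norm(before))
print("constraint error after: ", np.linalg.norm(after))
```

Because each output frame sees only a window of K hidden frames, the edit in this sketch stays local: hidden units outside the constrained frames' receptive field receive zero gradient, so frames far from the constraint are reproduced unchanged.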

[Paper PDF] | [Motion Database]

Last updated: 30th July 2016